So, in my previous post, I demonstrated a program called a harmonic engram mapper, which identifies the specific weights in a multi-layer perceptron (MLP) neural net that contribute most strongly to the formation of a particular trained memory. In essence, these weights are the location of the memory, or engram. The program worked, but I have to admit that when all was said and done, I was a little underwhelmed. It felt like a solution in search of a problem. Then yesterday I got to thinking: if I know which weights are important, I also know which weights are NOT important. I realized this might form the basis for a pruning scheme in a neural net.

Neural pruning is the process by which biological neural tissue sheds redundant synaptic connections. Applied to artificial networks, pruning can bring large efficiency gains: it takes less computational power because fewer weights are factored into the calculations.

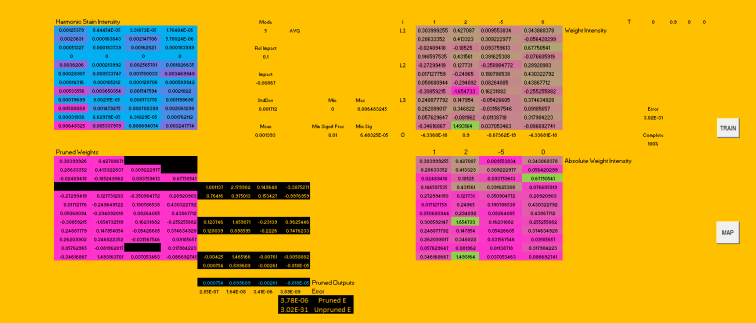

So I modified my program to create a duplicate of the feed-forward portion of the 3-layer MLP in my harmonic mapper program, and used spreadsheet IF statements to zero any weight that fell below a certain importance threshold as measured by the harmonic mapper. As before, the network was trained on a single example. Here is the program:
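For readers who would rather see the pruning rule outside a spreadsheet, here is a minimal NumPy sketch of the same idea. It assumes you already have an importance score for every weight (in my case that would be the harmonic stain intensity; here it is just an array you pass in), and that the cutoff is a fraction of the largest score in the array you hand it. The function name `prune_by_importance` is hypothetical and is not part of my spreadsheet.

```python
import numpy as np

def prune_by_importance(weights, importance, rel_threshold=0.01):
    """Zero every weight whose importance is below rel_threshold * max(importance).

    Roughly the array version of a spreadsheet cell such as
    =IF(importance < 0.01 * MAX(importance_range), 0, weight).
    The cutoff uses the max of the importance array passed in (an assumption).
    """
    weights = np.asarray(weights, dtype=float)
    importance = np.asarray(importance, dtype=float)
    if weights.shape != importance.shape:
        raise ValueError("weights and importance must have the same shape")
    cutoff = rel_threshold * importance.max()
    # Keep a weight only if its importance reaches the cutoff; otherwise zero it.
    return np.where(importance < cutoff, 0.0, weights)

# Example: with a 10% threshold the cutoff is 0.09, so the weights with
# importance 0.004 and 0.05 are zeroed and the other two are kept.
w = np.array([[0.5, -1.2], [0.3, 0.8]])
imp = np.array([[0.9, 0.004], [0.2, 0.05]])
print(prune_by_importance(w, imp, rel_threshold=0.1))
```

If you want a single cutoff across every layer instead of one per weight matrix, you would compute the maximum over all importance arrays first and compare against that.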

Closer view:

In this test, the spreadsheet was set up to zero any weight whose harmonic stain intensity falls below 1% of the maximum intensity. As you can see in the black box in the lower right, the error does increase after pruning, but it's not horrible. I suspect that with a wider neural net the increase might be quite acceptable, but I don't know for sure. I'd like to implement this algorithm on a larger scale using TensorFlow when I get the time.
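To make the before/after error comparison concrete, here is a rough, self-contained Python sketch of the whole experiment: train a small input-hidden-output sigmoid network on a single example, prune at 1% of the maximum importance, and compare squared error. Several things here are assumptions, not my spreadsheet: the layer sizes, the absence of biases, the training settings, and especially the importance score. I use `|incoming activation × weight|` for the one training example as a stand-in, because the harmonic mapper's stain intensity is not reproduced here. All function names are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, w1, w2):
    """Input -> hidden -> output with sigmoid activations and no biases (assumption)."""
    h = sigmoid(x @ w1)
    return h, sigmoid(h @ w2)

def train_single_example(x, target, n_hidden=8, lr=0.5, epochs=2000, seed=0):
    """Plain gradient descent on squared error for one training example."""
    rng = np.random.default_rng(seed)
    w1 = rng.normal(scale=0.5, size=(x.size, n_hidden))
    w2 = rng.normal(scale=0.5, size=(n_hidden, target.size))
    for _ in range(epochs):
        h, y = forward(x, w1, w2)
        delta_out = (y - target) * y * (1 - y)        # output-layer error signal
        delta_hid = (delta_out @ w2.T) * h * (1 - h)  # backpropagated to hidden layer
        w2 -= lr * np.outer(h, delta_out)
        w1 -= lr * np.outer(x, delta_hid)
    return w1, w2

def prune(w, importance, global_max, rel_threshold):
    """Zero weights whose importance is below rel_threshold of the global max."""
    return np.where(importance < rel_threshold * global_max, 0.0, w)

x = np.array([1.0, 0.0, 1.0, 0.5])
target = np.array([0.9, 0.1])
w1, w2 = train_single_example(x, target)

# Stand-in importance (NOT the harmonic stain): |activation * weight| for this example.
h, y = forward(x, w1, w2)
imp1 = np.abs(x[:, None] * w1)
imp2 = np.abs(h[:, None] * w2)
global_max = max(imp1.max(), imp2.max())

# Prune at 1% of the maximum importance, as in the spreadsheet test.
w1_p = prune(w1, imp1, global_max, 0.01)
w2_p = prune(w2, imp2, global_max, 0.01)
_, y_p = forward(x, w1_p, w2_p)

err = np.sum((y - target) ** 2)
err_p = np.sum((y_p - target) ** 2)
n_zeroed = int((w1_p == 0).sum() + (w2_p == 0).sum())
print(f"error before: {err:.6f}  after: {err_p:.6f}  weights zeroed: {n_zeroed}")
```

Changing `n_hidden` is one quick way to poke at the "wider net" question, though results from a toy stand-in importance score won't necessarily match what the harmonic mapper would select.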